End-to-End Guide: Converting and Benchmarking a Model

These steps walk you through deploying a binarized neural network (BNN) with Larq Compute Engine (LCE). The guide starts by downloading, converting, and benchmarking a model from Larq Zoo, and then discusses the process for a custom model.

Picking a model from Larq Zoo

This example uses the QuickNet model from the sota submodule of larq-zoo. First, install the Larq Ecosystem pip packages:

```shell
pip install larq larq-zoo larq-compute-engine
```

```python
import larq as lq
import larq_compute_engine as lce
import larq_zoo as lqz

# Load the QuickNet architecture and download the weights for ImageNet
model = lqz.sota.QuickNet(weights="imagenet")
lq.models.summary(model)
model.save("quicknet.h5")
```
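To check the size of the saved file from Python instead of a file browser, a small standard-library helper is enough. This is a minimal sketch; `file_size_mib` is a hypothetical helper name and not part of Larq:

```python
import os


def file_size_mib(path: str) -> float:
    """Return the size of a file in mebibytes (1 MiB = 1024 * 1024 bytes)."""
    return os.path.getsize(path) / (1024 * 1024)


# Only report if the model file from the previous step exists
if os.path.exists("quicknet.h5"):
    print(f"quicknet.h5: {file_size_mib('quicknet.h5'):.1f} MiB")
```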

If you check the size of the `quicknet.h5` file you just saved, you'll see that it is around 42 MiB. This is because the model is currently unoptimized and its weights are still stored as floats rather than binary values, so executing this model on any device would not be fast at all.

Converting the model

Larq Compute Engine is built on top of TensorFlow Lite, and therefore uses the TensorFlow Lite FlatBuffer format to serialize Larq models for inference. We provide our own LCE Model Converter for converting Keras models to flatbuffers; it includes additional optimization passes that increase the execution speed of Larq models on the supported target platforms.
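The size reduction comes largely from the weights: a binarized weight carries only one bit of information, whereas the Keras file stores it as a 32-bit float. The NumPy sketch below illustrates that arithmetic by packing -1/+1 weights into bits with `np.packbits`. It only demonstrates the idea and is not meant to reflect how the LCE converter actually lays out weights in the flatbuffer; layers that stay in floating point do not shrink this way. `float_vs_packed_bytes` is a hypothetical helper:

```python
import numpy as np


def float_vs_packed_bytes(shape, seed=0):
    """Compare storing a binarized kernel as float32 vs. 1 bit per weight."""
    rng = np.random.default_rng(seed)
    # Binarize random latent weights to -1/+1 (0 maps to +1, like Larq's sign)
    latent = rng.standard_normal(shape).astype(np.float32)
    binary = np.where(latent >= 0, 1.0, -1.0).astype(np.float32)
    # Encode +1 as bit 1 and -1 as bit 0, then pack 8 weights into each byte
    packed = np.packbits((binary > 0).ravel())
    # Unpacking recovers the exact -1/+1 values, so nothing is lost
    restored = np.where(np.unpackbits(packed)[: binary.size] == 1, 1.0, -1.0)
    assert np.array_equal(restored.reshape(shape), binary)
    return binary.nbytes, packed.nbytes


# e.g. a 3x3 binary convolution kernel with 32 input and 64 output channels
float_bytes, packed_bytes = float_vs_packed_bytes((3, 3, 32, 64))
print(float_bytes, packed_bytes, float_bytes / packed_bytes)
```

For this kernel the float32 version needs 73728 bytes and the packed version 2304 bytes, a factor of 32.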

Using this converter is simple: add the following code to the Python script from the previous section:

```python
# Convert our Keras model to a TFLite flatbuffer file
with open("quicknet.tflite", "wb") as flatbuffer_file:
    flatbuffer_bytes = lce.convert_keras_model(model)
    flatbuffer_file.write(flatbuffer_bytes)
```
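As a quick sanity check that the written file is a TFLite flatbuffer, you can look for the `TFL3` file identifier defined in the TensorFlow Lite schema. This sketch assumes the converter keeps that standard identifier; `has_tflite_identifier` is a hypothetical helper, and the check says nothing about whether the model itself is correct:

```python
import os


def has_tflite_identifier(path: str) -> bool:
    """Return True if the file carries the TFLite FlatBuffer identifier "TFL3".

    FlatBuffers store the 4-byte file identifier right after the 4-byte
    root table offset, i.e. at bytes 4..8 of the file.
    """
    with open(path, "rb") as f:
        header = f.read(8)
    return len(header) == 8 and header[4:8] == b"TFL3"


if os.path.exists("quicknet.tflite"):
    print("TFL3 identifier found:", has_tflite_identifier("quicknet.tflite"))
```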

Running the conversion code produces a `quicknet.tflite` file with compressed weights and optimized operations, which is only just over 3 MiB in size!

Benchmarking

This part of the guide assumes that you want to benchmark on a 64-bit ARM-based system, such as a Raspberry Pi running a 64-bit operating system. For more detailed instructions on benchmarking, and for benchmarking on Android phones, see the Benchmarking guide.

On ARM, benchmarking is as simple as downloading the pre-built benchmarking binary from the latest release to the target device and running it with the converted model:

Warning

The following commands should be run on the target platform, e.g. a Raspberry Pi. Remove the leading exclamation marks when running them in a regular shell; they are only needed here to make this valid notebook syntax.

```shell
!wget https://github.com/larq/compute-engine/releases/download/v0.4.2/lce_benchmark_model_aarch64 -O lce_benchmark_model
!chmod +x lce_benchmark_model
!./lce_benchmark_model --graph=quicknet.tflite --num_runs=50 --num_threads=1
```

The key figure in the output is `Inference (avg)`, which in this case is 31.4 ms (31420.6 microseconds) on a Raspberry Pi 4B. To see the other available benchmarking options, add `--help` to the command above.
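To compare several thread counts on the target device, the benchmark command can be scripted. This is a minimal sketch, assuming Python 3.7+ is available on the device and the binary and model sit in the current directory; it only uses the `--graph`, `--num_runs` and `--num_threads` flags shown above, and `benchmark_command` / `run_benchmark` are hypothetical helper names. Look for `Inference (avg)` in each run's output:

```python
import os
import subprocess
from typing import List


def benchmark_command(graph: str, num_runs: int = 50, num_threads: int = 1,
                      binary: str = "./lce_benchmark_model") -> List[str]:
    """Build the argument list for one run of the LCE benchmark binary."""
    return [
        binary,
        f"--graph={graph}",
        f"--num_runs={num_runs}",
        f"--num_threads={num_threads}",
    ]


def run_benchmark(graph: str, num_threads: int) -> str:
    """Run the benchmark once and return everything it printed."""
    result = subprocess.run(
        benchmark_command(graph, num_threads=num_threads),
        capture_output=True, text=True, check=True,
    )
    # The tool may log to stderr, so keep both streams
    return result.stdout + result.stderr


# Only run on the target device, where the binary and the model are present
if os.path.exists("./lce_benchmark_model") and os.path.exists("quicknet.tflite"):
    for threads in (1, 2, 4):  # a Raspberry Pi 4B has four cores
        print(f"=== num_threads={threads} ===")
        print(run_benchmark("quicknet.tflite", threads))
```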

Create your own Larq model

Instead of using one of our models, you will most likely want to benchmark a custom model that you trained yourself. For more information on creating and training a BNN with Larq, see our Larq User Guides. For best practices on optimizing Larq models for LCE, also see our Model Optimization Guide.

The code below defines a simple BNN model that takes a 32x32 input image and classifies it into one of 10 classes.

```python
import larq as lq
import larq_compute_engine as lce
import tensorflow as tf

# Define a custom model
model = tf.keras.models.Sequential(
    [
        tf.keras.layers.Input((32, 32, 3), name="input"),
        # First layer (float)
        tf.keras.layers.Conv2D(32, kernel_size=(5, 5), padding="same", strides=3),
        tf.keras.layers.BatchNormalization(),
        # Note: we do NOT add a ReLU here, because the subsequent activation quantizer would destroy all information!
        # Second layer (binary)
        lq.layers.QuantConv2D(
            32,
            kernel_size=(3, 3),
            padding="same",
            strides=2,
            input_quantizer="ste_sign",
            kernel_quantizer="ste_sign",
            kernel_constraint="weight_clip",
            use_bias=False,  # We don't need a bias, since the BatchNorm already has a learnable offset
        ),
        tf.keras.layers.BatchNormalization(),
        tf.keras.layers.Activation("relu"),
        # Third layer (binary)
        lq.layers.QuantConv2D(
            64,
            kernel_size=(3, 3),
            padding="same",
            strides=2,
            input_quantizer="ste_sign",
            kernel_quantizer="ste_sign",
            kernel_constraint="weight_clip",
            use_bias=False,  # We don't need a bias, since the BatchNorm already has a learnable offset
        ),
        tf.keras.layers.BatchNormalization(),
        tf.keras.layers.Activation("relu"),
        # Pooling and final dense layer (float)
        tf.keras.layers.GlobalAveragePooling2D(),
        tf.keras.layers.Dense(10, activation="softmax"),
    ]
)
lq.models.summary(model)

# Note: Realistically, you would of course want to train your model before converting it!

# Convert our Keras model to a TFLite flatbuffer file
with open("custom_model.tflite", "wb") as flatbuffer_file:
    flatbuffer_bytes = lce.convert_keras_model(model)
    flatbuffer_file.write(flatbuffer_bytes)
```

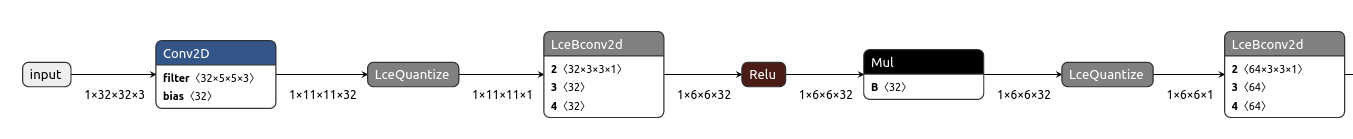

There is an unexpected `Mul` operation, with a fused `ReLU`, between the two binary convolutions. Since `BatchNormalization` and `ReLU` can be efficiently fused into the convolution operation, this indicates that something about our model configuration is suboptimal.

There are two culprits here, both explained in the Model Optimization Guide:

- The order of `BatchNormalization` and `ReLU` is incorrect. Not only does this prevent fusing these operators with the convolution, but since `ReLU` produces only non-negative values, the subsequent `LCEQuantize` operation will turn the entire output into ones, and the network cannot learn anything. This can be easily fixed by reversing the order of these two operations:

  ```python
  # Example code for a correct ordering of binary convolution, ReLU and BatchNorm.
  lq.layers.QuantConv2D(
      32,
      kernel_size=(3, 3),
      padding="same",
      strides=2,
      input_quantizer="ste_sign",
      kernel_quantizer="ste_sign",
      kernel_constraint="weight_clip",
      use_bias=False,  # We don't need a bias, since the BatchNorm already has a learnable offset
  ),
  tf.keras.layers.Activation("relu"),
  tf.keras.layers.BatchNormalization(),
  ```

However, if you change the model definition above to incorporate these changes, the graph looks like this:

Which is even worse, because there are now two unfused operations (

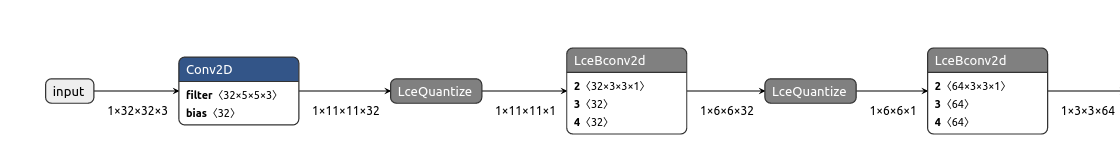

ReLUandMul) instead of one (Mulwith fusedReLU).This is because while the binary convolutions use

padding="same", no padding value was specified and therefore the default value of 0 is used. Since binary weights can only take two values, -1 and 1, this 0 cannot also be represented in the existing input tensor, so an additional correction step is necessary and theReLUcannot be fused. This can be resolved by usingpad_values=1for the binary convolutions:# Example code for a fusable configuration of a binary convolution with "same" padding, including ReLU and BatchNorm. lq.layers.QuantConv2D( 32, kernel_size=(3, 3), padding="same", pad_values=1, strides=2, input_quantizer="ste_sign", kernel_quantizer="ste_sign", kernel_constraint="weight_clip", use_bias=False # We don't need a bias, since the BatchNorm already has a learnable offset ) tf.keras.layers.Activation("relu") tf.keras.layers.BatchNormalization()
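Both culprits can be reproduced with a few lines of NumPy. This is a simplified sketch, not Larq's implementation: `sign` mimics the forward pass of Larq's `ste_sign` (mapping 0 to +1), and `batch_norm` is a stripped-down BatchNorm with gamma = 1 and beta = 0 that normalizes with the tensor's own statistics. With learned parameters the exact numbers would differ, but a ReLU output fed straight into a sign quantizer is all +1 regardless:

```python
import numpy as np


def sign(x):
    """Binarize to -1/+1, mapping 0 to +1 (the convention of Larq's ste_sign)."""
    return np.where(x >= 0, 1.0, -1.0)


def batch_norm(x, eps=1e-3):
    """Simplified BatchNorm: own mean/variance, gamma = 1, beta = 0."""
    return (x - x.mean()) / np.sqrt(x.var() + eps)


rng = np.random.default_rng(0)
conv_out = rng.standard_normal(1000)  # stand-in for a convolution output

# Culprit 1: BatchNorm -> ReLU -> sign yields only +1, so no information survives
wrong_order = sign(np.maximum(batch_norm(conv_out), 0.0))
# ReLU -> BatchNorm -> sign keeps both signs
right_order = sign(batch_norm(np.maximum(conv_out, 0.0)))
print(np.unique(wrong_order), np.unique(right_order))

# Culprit 2: zero padding adds a third value that a -1/+1 tensor cannot hold
binary_input = sign(rng.standard_normal((4, 4)))
zero_padded = np.pad(binary_input, 1, constant_values=0.0)
one_padded = np.pad(binary_input, 1, constant_values=1.0)
print(np.unique(zero_padded), np.unique(one_padded))
```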

The `ReLU` and `BatchNormalization` operations have now been fused into the convolution operation, so the inference engine only has to execute a single operation instead of three!
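One way to see why a ReLU followed by BatchNormalization fuses so well: at inference time, BatchNormalization is a per-channel affine transform, so it can be folded into one multiply and one add per output channel applied right after the ReLU. The NumPy sketch below checks that equivalence with random parameters. `fold_batchnorm` is a hypothetical helper, the epsilon of 1e-3 matches the Keras `BatchNormalization` default, and the exact fusion LCE performs internally may differ in detail:

```python
import numpy as np


def fold_batchnorm(gamma, beta, moving_mean, moving_var, eps=1e-3):
    """Return per-channel (multiplier, bias) equivalent to inference-mode BatchNorm."""
    multiplier = gamma / np.sqrt(moving_var + eps)
    bias = beta - moving_mean * multiplier
    return multiplier, bias


rng = np.random.default_rng(0)
channels = 4
conv_out = rng.standard_normal((8, 8, channels))  # stand-in for a binary conv output (H, W, C)
gamma = rng.uniform(0.5, 2.0, channels)
beta = rng.standard_normal(channels)
moving_mean = rng.standard_normal(channels)
moving_var = rng.uniform(0.5, 2.0, channels)

relu_out = np.maximum(conv_out, 0.0)
# Unfused: ReLU, then BatchNorm as a separate operation
unfused = gamma * (relu_out - moving_mean) / np.sqrt(moving_var + 1e-3) + beta
# Fused: after the ReLU, a single multiply-add per channel
multiplier, bias = fold_batchnorm(gamma, beta, moving_mean, moving_var)
fused = relu_out * multiplier + bias
print(np.allclose(unfused, fused))
```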